(I am part one of a series)

While the term assertion in a computing context has a pedigree stretching back to Turing, Goldstine and von Neumann, finding a good definition for a testing assertion is a little more challenging.

The world’s greatest citation repository for the lazy student has a plausible definition of a test assertion as being “an assertion, along with some form of identifier” but with uninspiring references to back it up.

Kent Beck’s seminal “Simple Smalltalk Testing: With Patterns”, which begat SUnit, which begat xUnit, doesn’t mention assertions at all. Instead, he talks about Test Case methods on a testing object which raise catchable exceptions via the testing object.

“Unit Test Frameworks”, which covers xUnit-style testing but with a focus on Java and JUnit, uses the term “test assert”:

Test conditions are checked with test assert methods. Test asserts result in test success or failure. If the assert fails, the test method returns immediately. If it succeeds, the execution of the test method continues.

As a proud Perl developer, I’m already aware that Perl’s testing assertions (examined in the next section) are more properly testing predicates than assertions – they have a boolean value and don’t raise exceptions on failure, and further predicates in any given method are tested even if preceding ones failed.

However, to simplify going forward, both testing predicates and testing assertions will be referred to under the banner of testing assertions.

Perl

Perl’s basic method of recording the result of a test assertion run is for the testing code to emit the string ok # or not ok # (where # is a test number) to STDOUT – this output is intended to be aggregated and parsed by the software running the test cases.

These basic strings form the basis of TAP – the Test Anything Protocol. TAP is “a simple text-based interface between testing modules in a test harness”, and both consumers and emitters of it exist for many programming languages (including Python and Ruby).
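To make the consuming side concrete, here is a minimal sketch in Python of a program that aggregates ok / not ok lines. It is an illustration only: the names TAP_LINE and summarize_tap are made up for this example, and it ignores the rest of the protocol (plans, directives, nested output) that a real harness would handle.

```python
import re

# Matches the two basic result lines: "ok 1 - description" / "not ok 2 - description".
TAP_LINE = re.compile(
    r"^(?P<status>ok|not ok)\b\s*(?P<number>\d+)?\s*(?:-\s*)?(?P<description>.*)$"
)


def summarize_tap(output):
    """Sort the descriptions of result lines into passed and failed lists."""
    passed, failed = [], []
    for line in output.splitlines():
        match = TAP_LINE.match(line)
        if match is None:
            continue  # diagnostics ("# ...") and anything else are skipped here
        bucket = passed if match.group("status") == "ok" else failed
        bucket.append(match.group("description"))
    return {"passed": passed, "failed": failed}


sample = """\
ok 1 - String matches Foo
not ok 2 - String matches Bar
# Failed test 'String matches Bar'
ok 3 - Numbers add up
"""

summary = summarize_tap(sample)
print(len(summary["passed"]), "passed,", len(summary["failed"]), "failed")
```

Because the emitter and the consumer only share a text format, either side can be swapped for one written in a different language.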

The simplest possible valid Perl testing assertion then is:

printf("%s %d - %s\n",
    ($test_condition ? 'ok' : 'not ok'),
    $test_count++,
    "Test description"
)

Test::More – a wildly popular testing library that introduces basic testing assertions and is used by ~80% of all released Perl modules – describes its purpose as:

to print out either “ok #” or “not ok #” depending on if a given [test assertion] succeeded or failed

Test::More provides ok() as its basic unit of assertion:

ok( $test_condition, "Test description" );

And while we could use ok() to test string equality:

ok( $expected eq $actual, "String matches $expected" );

Test::More builds on ok() to provide tests which “produce better diagnostics on failure … [and that] know what the test was and why it failed”. We can use is() to rewrite our assertion:

is( $actual, $expected, "String matches $expected" );

And we’ll get detailed diagnostic output, also in TAP format:

not ok 1 - String matches Bar
#   Failed test 'String matches Bar'
#   at -e line 1.
#          got: 'Foo'
#     expected: 'Bar'

Test::More ships with a variety of these extended equality assertions.

However, the Test::More documentation has not been entirely honest with us. A quick look at its source code shows nary a print statement, but instead a reliance on what appears to be a singleton object of the class Test::Builder, to which ok() and other testing assertions delegate. Test::Builder is described as:

a single, unified backend for any test library to use. This means two test libraries which both use Test::Builder can be used together in the same program

Which is an intriguing claim that we’ll come back to when looking at how assertions are extended.
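The idea of a single shared backend can be sketched in Python. This is not how Test::Builder is implemented; Builder, is_ and contains are hypothetical names chosen for the illustration, and the two "libraries" are just two functions that delegate to the same object.

```python
class Builder:
    """Hypothetical stand-in for a shared backend: one counter for everybody."""

    _instance = None

    def __init__(self):
        self.count = 0
        self.failures = 0

    @classmethod
    def shared(cls):
        # Every caller receives the same object, so test numbers never collide.
        if cls._instance is None:
            cls._instance = cls()
        return cls._instance

    def ok(self, condition, description):
        self.count += 1
        passed = bool(condition)
        if not passed:
            self.failures += 1
        status = "ok" if passed else "not ok"
        print(f"{status} {self.count} - {description}")
        return passed

    def diag(self, message):
        print(f"# {message}")


# "Library A": an equality check with diagnostics, in the spirit of is().
def is_(actual, expected, description):
    builder = Builder.shared()
    passed = builder.ok(actual == expected, description)
    if not passed:
        builder.diag(f"     got: {actual!r}")
        builder.diag(f"expected: {expected!r}")
    return passed


# "Library B": an unrelated check that delegates to the same backend.
def contains(haystack, needle, description):
    return Builder.shared().ok(needle in haystack, description)


is_("Foo", "Bar", "String matches Bar")
contains("Foobar", "bar", "String contains bar")
```

Neither function knows about the other, yet the output is numbered consecutively, and the second check still runs after the first one fails.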

As noted in the introduction, these are not truly assertions – exceptions are not raised on failure. The following code:

ok( 0, "Should be 1" );
ok( 1, "Should also be 1" );

Will correctly flag that the first assertion failed, but then will continue to test the second one.

Python

Python provides a built-in assert statement whose arguments closely resemble the aforementioned “assertion, along with some form of identifier”:

assert test_condition, "Test description"

When an assertion is false, an exception of type AssertionError is raised associated with the string Test description. Uncaught, this will cause our Python test to exit with a non-zero status and a stack-trace printed to STDERR, like any other exception.

AssertionError has taken on a special signifying value for Python testing tools – virtually all of them will provide testing assertions which raise AssertionErrors, and will attempt to catch AssertionErrors, treating them as failures rather than exceptions. The unittest documentation provides quite a nice description of this distinction:

If the test fails, an exception will be raised, and unittest will identify the test case as a failure. Any other exceptions will be treated as errors. This helps you identify where the problem is: failures are caused by incorrect results – a 5 where you expected a 6. Errors are caused by incorrect code – e.g., a TypeError caused by an incorrect function call.

Which is foreshadowed by Kent Beck’s explanation:

A failure is an anticipated problem. When you write tests, you check for expected results. If you get a different answer, that is a failure. An error is more catastrophic, a error condition you didn’t check for.

Like Test::More’s ok(), there’s no default useful debugging information provided by assert other than what you provide. One can explicitly add it to the assert statement’s name, which is evaluated at run-time:

assert expected == actual, "%s != %s" % (repr(expected), repr(actual))

However, evidence that assert was not really meant for software testing starts to emerge as one digs deeper. It’s stripped out when one runs the code with optimization enabled (Python’s -O flag), and there’s no built-in mechanism for seeing how many times assert was called – code runs that are devoid of any assert statement are indistinguishable from code in which every assert statement passed. This makes it an unsuitable building block for testing tools, despite its initial promise.
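The stripping behaviour is easy to observe by running the same one-line program in two fresh interpreters, once normally and once with -O. The sketch below assumes nothing beyond the standard library; SNIPPET and exit_status are names made up for the example.

```python
import subprocess
import sys

SNIPPET = "assert False, 'never true'"


def exit_status(*interpreter_flags):
    """Run SNIPPET in a fresh interpreter and return its exit status."""
    command = [sys.executable, *interpreter_flags, "-c", SNIPPET]
    return subprocess.run(command, stderr=subprocess.DEVNULL).returncode


normal = exit_status()         # the assert is compiled in and raises AssertionError
optimized = exit_status("-O")  # the assert statement is removed at compile time

print("normal:", normal, "optimized:", optimized)
```

The optimized run exits cleanly, which is exactly the case described above: a run in which no assertion executed looks the same as a run in which every assertion passed.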

PyTest – a “mature full-featured Python testing tool” – deals with this by essentially turning assert into a macro. Testing libraries imported by code running under PyTest will have their assert statements rewritten on the fly to produce testing code capable of useful diagnostics and instrumentation. Running:

assert expected == actual, "Test description"

Using python directly gives us a simple error:

Traceback (most recent call last):
  File "assert_test.py", line 4, in <module>
    assert expected == actual, "Test description"
AssertionError: Test description

Where running it under py.test gives us proper diagnostic output:

======================= ERRORS ========================
___________ ERROR collecting assert_test.py ___________
assert_test.py:4: in <module>
    assert expected == actual, "Test description"
E   AssertionError: Test description
E     assert 'Foo' == 'Bar'
=============== 1 error in 0.01 seconds ===============

unittest, an SUnit descendant that’s bundled as part of Python’s standard library, provides its own methods that directly raise AssertionErrors. The basic unit is assertTrue:

self.assertTrue( testCondition )

unittest’s test assertions are meant to be run inside xUnit-style Test Cases which are run inside try/except blocks which will catch AssertionErrors. Amongst others, unittest provides an assertEqual:

self.assertEqual( expected, actual )

Although surprisingly we’ve had to remove the test name, so that it’ll be automatically set to a useful diagnostic message:

Traceback (most recent call last):
  File "test_fizzbuzz.py", line 11, in test_basics
    self.assertEqual( expected, actual )
AssertionError: 'Foo' != 'Bar'

Much as Perl unifies around TAP and Test::Builder, Python’s test tools unify around the raising of AssertionErrors. One notable practical difference between these approaches is that a single test assertion failure in Python will cause other test assertions in the same try/except scope not to be run – and in fact, not even acknowledged. Running:

assert 0, "Should be 1"
assert 1, "Should also be 1"

Gives us no information at all about our second assertion. This keeps in line with Hamill in Unit Test Frameworks:

Since a test method should only test a single behavior, in most cases, each test method should only contain one test assert.

Ruby

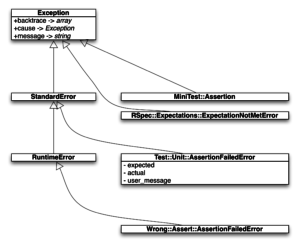

Ruby’s testing libraries are even less unified than Python’s, and as such there’s no shared central testing assertion mechanism. Instead, each popular testing library has its own exception classes that are raised to signal test failure.

This can perhaps best be illustrated by examining a library like wrong, which aims to provide testing assertions that can be used inside tests targeting the three most common testing libraries: RSpec, minitest and Test::Unit.

wrong in fact provides only one main testing assertion, assert, which accepts a block expected to evaluate to true; if it doesn’t, a Wrong::Assert::AssertionFailedError exception is raised:

assert { testing_condition }

Rather than providing any extended diagnostic testing assertions, wrong’s assert will introspect the block it was passed in case of a failure to produce a helpful message – an example from the wrong documentation:

x = 7; y = 10; assert { x == 7 && y == 11 }
 ==>
Expected ((x == 7) and (y == 11)), but
    (x == 7) is true
    x is 7
    (y == 11) is false
    y is 10

wrong – used by itself – raises exceptions of class Wrong::Assert::AssertionFailedError. This is a subclass of RuntimeError, which is “raised when an invalid operation is attempted”, which in turn is a subclass of StandardError, which in turn is a subclass of Exception, the base exception class.

Exception has some useful methods you’d want from an exception class – a way of getting a stack trace (backtrace), a descriptive message (message), and a way of wrapping child exceptions (cause). RuntimeError and StandardError don’t specialize the class in anything but name, and thus simply provide meta-descriptions of exceptions.

Ruby requires us to raise exceptions that are descended from Exception. Errors that inherit from StandardError are intended to be ones that are to some degree expected, and thus caught and acted upon. As a result, rescue – Ruby’s catch mechanism – will catch these errors by default.

Those that don’t inherit from StandardError have to be explicitly matched, either by naming them directly, or by specifying that you want to catch absolutely every error.
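Python draws a loosely comparable line between Exception and BaseException, so the consequence of choosing one base class over the other can be sketched there. This is an analogy, not a description of Ruby's semantics, and both exception classes below are hypothetical.

```python
class CatchableFailure(Exception):
    """Hypothetical: plays the role of an error descended from StandardError."""


class UncatchableFailure(BaseException):
    """Hypothetical: plays the role of an error that bypasses default handlers."""


def swallow_everything_ordinary(action):
    """Mimic application code that wraps a call in a catch-all handler."""
    try:
        action()
    except Exception:  # roughly the reach of a bare rescue in Ruby
        return "swallowed"
    return "completed"


def raise_catchable():
    raise CatchableFailure("hidden from the test runner")


def raise_uncatchable():
    raise UncatchableFailure("still visible to the test runner")


first = swallow_everything_ordinary(raise_catchable)
try:
    second = swallow_everything_ordinary(raise_uncatchable)
except UncatchableFailure:
    second = "escaped"

print(first, second)
```

A test failure raised as the first kind can be silently absorbed by the code under test; one raised as the second kind travels past the catch-all handler and reaches whatever is running the tests.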

Thus wrong considers its exceptions to be expected errors that should be caught. However, wrong also knows how to raise exceptions for the three major/most-popular testing libraries.

wrong’s RSpec adaptor will raise exceptions of type RSpec::Expectations::ExpectationNotMetError, which inherits directly from Exception and comes with the explicit note in the code:

We subclass Exception so that in a stub implementation if the user sets an expectation, it can’t be caught in their code by a bare rescue.

No structured diagnostic data is included in the exception – the diagnostics have been serialized to a string by the time they’re raised and are used as the Exception’s msg attribute. No additional helper methods are included directly in the exception class, either.

When wrong is used with minitest, its adaptor will have it raising instances of MiniTest::Assertion. Like RSpec’s exception class, this also inherits directly from Exception, meaning it won’t get caught by default by rescue. While it does include a couple of helper methods, these are simply convenience methods for formatting the stack trace, and any diagnostic data is pre-serialized into a string.

Finally, wrong will raise Test::Unit::AssertionFailedError exceptions when the right adaptor is used. These inherit from StandardError, so are philosophically “expected” errors. Of more interest is the availability of public attributes attached to these exceptions – expected and actual values are retained, as is the programmer’s description of the error. This allows for some more exciting possibilities for those who might wish to extend it – diagnostic information showing a line-by-line diff, language localization, or type-sensitive presentations, for example.
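To show why retaining the values matters, here is a sketch in Python of a failure exception that keeps its expected and actual values, plus a reporter that builds a line-by-line diff from them. ComparisonFailure, assert_equal and line_diff are hypothetical names; this does not reproduce Test::Unit's API.

```python
import difflib


class ComparisonFailure(AssertionError):
    """Hypothetical failure type that keeps its data, not only a message."""

    def __init__(self, expected, actual, description=""):
        super().__init__(description or f"{expected!r} != {actual!r}")
        self.expected = expected
        self.actual = actual
        self.description = description


def assert_equal(expected, actual, description=""):
    if expected != actual:
        raise ComparisonFailure(expected, actual, description)


def line_diff(failure):
    """Build a line-by-line diff from the values retained on the exception."""
    return list(
        difflib.unified_diff(
            str(failure.expected).splitlines(),
            str(failure.actual).splitlines(),
            fromfile="expected",
            tofile="actual",
            lineterm="",
        )
    )


try:
    assert_equal("Fizz\nBuzz\nFizzBuzz", "Fizz\nBazz\nFizzBuzz", "FizzBuzz output")
except ComparisonFailure as failure:
    report = line_diff(failure)
    print("\n".join(report))
```

The reporter is written after the fact and outside the exception class, which is only possible because the values were not flattened into a string when the failure was raised.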

In any case, as per Python’s use of raised exceptions to signal test failure, some form of catcher is needed around any block containing these assertions. As raising an Exception will terminate execution of the current scope until it’s caught or rescued, only the first testing assertion in any given try/catch scope will actually run.

Ruby’s testing libraries’ assertions lack any form of built-in unification – as wrong shows, to write software integrating with several of them requires you to write explicit adaptors for them. As there’s no built-in assertion exception class (like there is in Python), different testing libraries have chosen different base classes to inherit from, and thus there’s no general way of distinguishing a test failure raised via a testing assertion from any other runtime error, short of having a hard-coded list of exception-class names from various libraries.
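What such a hard-coded list looks like in practice can be sketched in Python, using two stand-in exception classes that deliberately share no useful base class. All names here are invented for the illustration; the class names merely echo the Ruby ones mentioned above.

```python
class ExpectationNotMetError(BaseException):
    """Stand-in for a library whose failures bypass ordinary handlers."""


class AssertionFailedError(Exception):
    """Stand-in for a library whose failures are ordinary, catchable errors."""


# Maintained by hand: a new testing library means a new entry.
KNOWN_FAILURE_CLASS_NAMES = {"ExpectationNotMetError", "AssertionFailedError"}


def is_test_failure(exc):
    """Check every class in the exception's ancestry against the list."""
    return any(cls.__name__ in KNOWN_FAILURE_CLASS_NAMES for cls in type(exc).__mro__)


def classify(action):
    try:
        action()
    except BaseException as exc:  # this broad because there is no shared base class
        return "failure" if is_test_failure(exc) else "error"
    return "pass"


def raising(exc):
    """Return a callable that raises the given exception when called."""
    def action():
        raise exc
    return action


outcomes = [
    classify(raising(ExpectationNotMetError("expectation not met"))),
    classify(raising(AssertionFailedError("assertion failed"))),
    classify(raising(RuntimeError("unrelated bug"))),
    classify(lambda: None),
]
print(outcomes)
```

Matching on names is fragile: it breaks when a library renames a class and misfires when an unrelated class happens to share a name, which a single agreed base class would avoid.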

Summary

The effects of these differences will become more apparent as we look at ways of extending the testing assertions provided. In summary:

- Perl communicates the result of testing assertions by pushing formatted text out to a file-handle – appropriate for the Practical Extraction and Report Language. However, in practice this appears to be mostly managed by a Singleton instance of Test::Builder which the vast majority of Perl testing libraries make use of

- Python’s testing infrastructure appears to have evolved from overloading the use of a more generic assert statement which provides runtime assertions and raises exceptions of the class AssertionError on failure. The use of a shared class between testing libraries for signaling test failure seems to allow a fair amount of interoperability

- Ruby has several mutually incompatible libraries for performing testing, each of which essentially raises an Exception on test failure, but each of which has its own ideas about how that Exception should look, leading to an ecosystem where auxiliary testing modules need to have adaptors to work with each specific test system

I’m a strong advocate of Perl’s Test::Unit::TestCase, which provides true assertions (throws exceptions) and is extendable for writing your own assertions by subclassing from Test::Unit::TestCase. I don’t understand why this framework isn’t more widely used or spoken about and I welcome any comments.

What would be the advantage of using it over the more popular Test::Builder/TAP approach? What’s the benefit of throwing exceptions, per se?

Good question. I’m wondering the same thing… and vice versa. It would be interesting to see a table of pros and cons for each framework. This blog seems to prefer assertions (maybe because they are more extensible?), but the details are left for a later post.

Maybe “strong advocate” wasn’t the right phrase for me to use. I enjoy working with Test::Unit::TestCase, but admittedly, I have little experience with Test::Builder, Test::More, and TAP.